The Fundamentals of Big Data Engineering

Big data has revolutionized the way data is processed and analyzed. It has become a fundamental part of enterprise architecture, providing insights that help companies make better-informed decisions. With the vast amount of data generated every day, it has become crucial to have the right data engineering skills and processes to handle this data. In this article, we will explore the fundamental concepts of big data engineering, including the tools and technologies used to process and analyze big data.

What is Big Data Engineering?

Big data engineering is the practice of storing, processing, and analyzing large amounts of structured and unstructured data. It involves designing and implementing systems that can handle data at scale, and converting raw data into insights that support informed decisions. It is a multidisciplinary field that brings together skills from computer science, statistics, and business analysis.

Key Concepts in Big Data Engineering

Data Processing

Processing big data involves breaking down large datasets into smaller, more manageable chunks. This can be done through a variety of techniques, including parallel processing, distributed computing, and real-time data processing. The goal of data processing is to make data more manageable, faster to access, and easier to understand.
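The idea of splitting a dataset into chunks and processing them in parallel can be sketched in a few lines of Python. This is a minimal illustration, not a production pattern: the helper names (`chunked`, `partial_sum`, `parallel_sum`) and the default chunk size are assumptions made for this example, and a thread pool on one machine stands in for what a real system would spread across processes or cluster nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Yield consecutive slices of `items`, each with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def partial_sum(chunk):
    """The work done independently on a single chunk."""
    return sum(chunk)


def parallel_sum(items, chunk_size=1000, workers=4):
    """Sum `items` by processing chunks concurrently and combining the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is handed to a worker; results come back in chunk order.
        partials = pool.map(partial_sum, chunked(items, chunk_size))
        return sum(partials)


total = parallel_sum(list(range(10_000)))
print(total)
```

The same shape (split, process independently, combine) is what distributed frameworks apply across many machines; only the scheduling and data movement become more involved.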

Data Storage

Big data requires storage solutions that can handle vast amounts of data efficiently. Traditional relational databases running on a single server often struggle with the volume and variety of data generated by modern applications, so NoSQL and distributed storage solutions have become popular. Widely used storage technologies include Hadoop Distributed File System (HDFS), Cassandra, and MongoDB.

Data Analytics

Data analytics transforms raw data into insights that can be used to make decisions. It applies statistical and analytical techniques to large datasets to identify patterns, trends, and anomalies. At big data scale, this work typically relies on specialized tools such as Hadoop, Spark, and Hive.
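As one small example of identifying anomalies, the sketch below flags values that lie far from the mean, measured in standard deviations (a z-score). The function name `find_anomalies`, the threshold of 2.0, and the sample `daily_orders` data are all assumptions made for illustration; real pipelines usually need methods that are more robust to skewed data.

```python
from statistics import mean, pstdev


def find_anomalies(values, threshold=2.0):
    """Return the values whose z-score exceeds `threshold` in absolute value."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Hypothetical daily order counts with one unusual day.
daily_orders = [102, 98, 105, 97, 101, 99, 250, 103, 100, 96]
print(find_anomalies(daily_orders))
```

On this sample, only the day with 250 orders is flagged, since every other day sits well within one standard deviation of the mean.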

Machine Learning

Machine learning involves using algorithms to make predictions based on data. It is used extensively in big data applications, including natural language processing, image recognition, and predictive analytics. Popular machine learning frameworks include TensorFlow, Keras, and PyTorch.
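To make "predictions based on data" concrete, here is a minimal sketch of one of the simplest models, a straight line fitted by ordinary least squares, written in plain Python rather than with any of the frameworks named above. The function names and the `ad_spend` and `sales` figures are invented for this example.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data that follows y = 2x + 1 exactly.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.0, 5.0, 7.0, 9.0, 11.0]

model = fit_line(ad_spend, sales)
print(predict(model, 6.0))
```

Frameworks such as TensorFlow and PyTorch generalize this fit-then-predict loop to models with millions of parameters and to training data that does not fit on one machine.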

Big Data Engineering Tools and Technologies

Hadoop

Hadoop is an open-source framework designed for distributed storage and processing of large datasets. Its core components are Hadoop Distributed File System (HDFS) for storage, MapReduce for processing, and, since Hadoop 2, YARN for cluster resource management. Hadoop is highly scalable and has been used by companies such as Yahoo, Facebook, and LinkedIn for big data processing.
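The MapReduce model can be illustrated without a cluster. The sketch below simulates its three stages (map, shuffle, reduce) in plain Python for the classic word count problem. It does not use Hadoop's API; the function names are assumptions chosen for this example, and in a real job the framework would run the map and reduce steps on many nodes and handle the shuffle itself.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run the three stages in sequence on an in-memory list of lines."""
    mapped = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data big insights", "data at scale"])
print(counts)
```

Because each map call looks at only one line and each reduce call at only one key, both stages can be run independently on different machines, which is what makes the model scale.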

Category: Distributed System

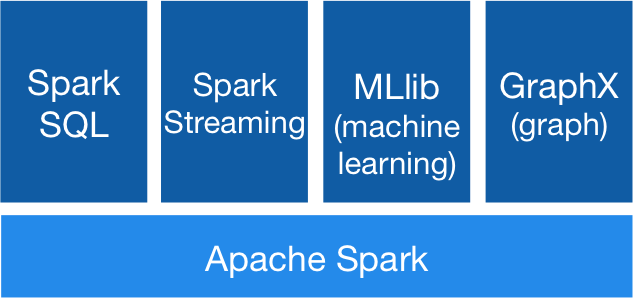

Spark

Apache Spark is another open-source big data processing framework. It provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. Spark supports a variety of languages, including Scala, Java, and Python, and provides a range of libraries for machine learning, SQL, and graph processing.

Category: Distributed System

Cassandra

Cassandra is a highly scalable, distributed NoSQL database. It is designed to handle large amounts of data and is used extensively in big data applications. Cassandra is known for its fault tolerance, high availability, and ease of scalability.

Category: Database

MongoDB

MongoDB is a popular NoSQL database that is known for its scalability, performance, and ease of use. It supports a range of data types and allows for horizontal scaling through sharding.
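The general idea behind sharding, routing each record to one of several servers based on a key, can be sketched as follows. This is a simplified illustration and does not reproduce MongoDB's internal mechanics or use its driver: the function names, the use of SHA-256, and the modulo-based routing are all assumptions made for this example.

```python
import hashlib


def shard_for(key, num_shards):
    """Map a string key to a shard index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and reduce it to a shard index.
    return int.from_bytes(digest[:8], "big") % num_shards


def distribute(keys, num_shards):
    """Assign every key to exactly one shard."""
    shards = {index: [] for index in range(num_shards)}
    for key in keys:
        shards[shard_for(key, num_shards)].append(key)
    return shards


# Hypothetical user identifiers spread across three shards.
user_ids = [f"user-{n}" for n in range(10)]
for index, members in distribute(user_ids, 3).items():
    print(index, members)
```

A stable hash guarantees that the same key always lands on the same shard, so reads know where to look. One known weakness of plain modulo routing is that changing the number of shards remaps most keys, which is why production systems use more elaborate schemes.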

Category: Database

TensorFlow

TensorFlow is an open-source platform for building machine learning models. It supports a range of models, from linear regression to deep neural networks, and provides APIs for building, training, and testing them.

Category: Frameworks

PyTorch

PyTorch is an open-source machine learning library that is widely used in the data science community. It provides a range of APIs for building, training, and testing machine learning models, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

Category: Frameworks

Keras

Keras is a high-level neural networks API written in Python. Earlier releases could run on top of TensorFlow, CNTK, or Theano; Keras 3 supports TensorFlow, JAX, and PyTorch as backends. It provides a range of APIs for building, training, and testing neural networks.

Category: Frameworks

Conclusion

Big data engineering is a